Why I’m worried about the Face ID feature of the new iPhone X

It’s a scenario I have given a lot of thought, as I’m quite a fan of science fiction and I love dystopian science fiction movies, but it’s getting real and it’s getting real very fast. Like many others, I tuned in to the live stream of the iPhone event on Tuesday the 12th of September. Here’s a link to the recording. One of the main and most impressive features is Face ID. Some would say that Apple was trying to get rid of the home button on the front of the phone and therefore developed Face ID, but I’m convinced that it was the other way around. Face ID is Apple’s big bet and authentication is a by-product. We can always expect upgrades of specifications, such as CPU, memory and camera, but this keynote was all about the technology behind Face ID, as seen in the Animoji demo.

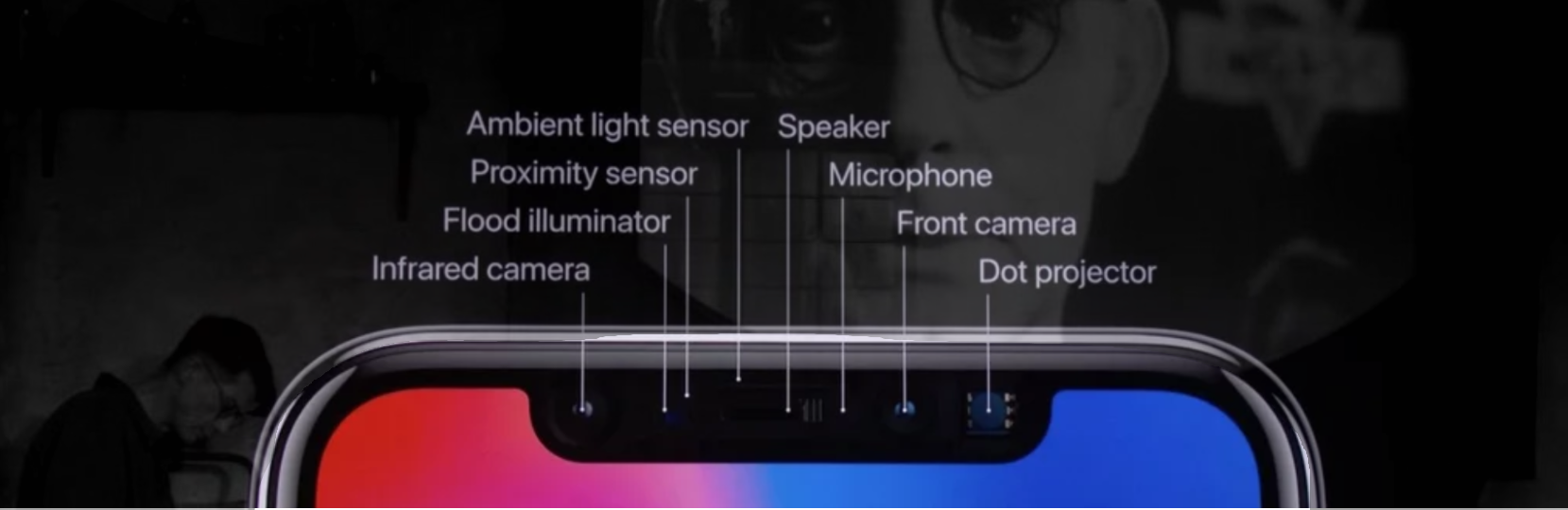

The combination of multiple sensors, the camera and a dot projector is used to create a very detailed map of your face. This information is used to change the facial expression of animojis and thereby animate them. Apple also gave a demo of Snapchat filters, which was a lot more detailed; the mesh that is created is made visible to you through augmented reality. The question is, how much information is given to Snapchat?

Apple’s big bet is on this technology and I can’t blame them. They presented the new iPhones as a big step in the evolution of smartphones by comparing this to the introduction of the first iPhone 10 years ago. Apple revolutionized the industry by introducing multi-touch, and now Face ID is bridging the gap in our connection to the phone, as it gives the phone very detailed information to work with. Microsoft introduced similar technology with Windows Hello, but as consumers we spend a lot more time with our phones, and Apple has a huge community of developers that can’t wait to make use of this technology. This is why the new iPhone X is indeed the next step in the evolution of smartphones.

Apple reassured everyone that the data is encrypted and processed locally, but this seems to relate only to the authentication feature. They are not making use of cloud services to enable Face ID authentication, but as you can see above, the data can be used in apps such as Snapchat. I’m not worried about the Snapchat videos or the many animojis people will create. I’m worried about the data being shared with Apple and the companies building the apps. A lot of work has been done in this field, and we rarely get to see the potential of this technology because big companies such as Apple acquire the companies working on it and limit the amount of information we can get about them. The video below shows how similar tech is used in the detective game L.A. Noire (Rockstar Games).

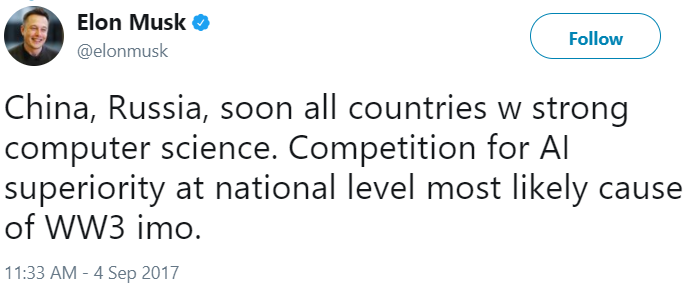

An important part of the game is reading someone’s face, and thereby their emotions, and figuring out if they are being deceitful or holding back information. This was back in 2011 and it was quite a big step for games. Now imagine six more years of technological advancement and take into account the impact of AI and machine learning. Apple is opening a door and soon companies such as Facebook will try to benefit from this. Here’s an article that has had a huge and negative impact on how I look at Facebook (link).

I think we can agree that doing something “for science” doesn’t mean it’s OK, and there is no safeguard against future unethical research projects. Facebook introduced the reaction feature a while back; now imagine them rolling out an update and asking you to confirm a certain reaction. I’m sure most people won’t mind if something saves them from having to interact with their phone by touching the screen, but this has bigger implications, as we’re not aware of how they are utilizing the data. We only see the app. Most people will happily sign up for controlling the interface using only their mind.

The movie Her is a nice example of what we can expect in the future. It’s a bit of a stretch, as the main character develops feelings for an operating system, but the most interesting part of this movie is the fact that the operating system can read his emotions and react to them.

It won’t take long before the developers of the framework and the apps build experiences that take into account the smallest changes in our facial expressions, which are called micro expressions. It’s not about us having a conversation with our phone and our phone figuring out if we’re telling the truth; it’s about experiences built around our state of mind and this information being used for something we deem unethical. Imagine Facebook’s “research” team figuring out how we react unconsciously to the information and ads shown to us via the feed, and think about the risks associated with this technology.

Most people are totally OK with uploading pictures to Facebook and allowing Facebook’s facial recognition software to create a profile of their face. The pictures you share of your friends, who might not even have a Facebook account, allow the company to create a profile of their faces as well. When did they sign up for something like that? This technology helps Facebook to identify people in pictures and it saves time when you want to tag your friends in them. All it needs now is a click. It limits the amount of interaction needed and thereby gives us something back, and that makes it OK for most of us. We’re collectively embracing any update given to us as long as it gives us some value, and we’ll collectively wait until something goes horribly wrong. Companies can get hacked and there is no guarantee that deleting your profile also deletes this data. You also have to keep in mind that our phones will gain the ability to scan the people around us, and this won’t be limited to the moment when we unlock our phones.

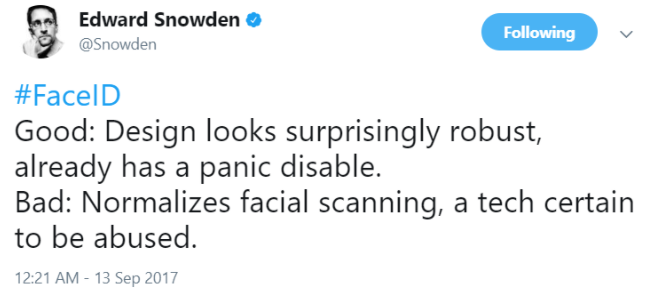

A lot of people are concerned about how secure Face ID is. I’m not too worried about someone creating a 3D model of my head to unlock my phone. It’s all about the data and the fact that it could take years or even longer before we find out that abuse has taken place. We’re living in an exciting time with regard to technological advancements, but it’s also quite scary, as we haven’t yet put anything in place to protect us from abuse.

I love to think about the amazing possibilities and I don’t think that we should completely halt technological progress in this area, but our options here are not binary. We need to be very critical of not only companies building the framework, but also the companies developing the apps. We’re now used to using our fingerprint to unlock our phones and removing this option on the iPhone X means that most if not all iPhone X users will make use of Face ID. We don’t want to be bothered with remembering a code and I understand that people are excited about the next iPhone as it has excited me too.

Millennials can’t wait to start using the animojis, and we’ll be happy to install an app that takes into account our current mood to present more relevant suggestions. It’s scary to consider that we can’t prevent abuse, that we will only figure it out years after it has taken place, and that by then it could already be too late.

Apple has acquired several facial-recognition-AI companies in the last few years (RealFace, PrimeSense and LinX) and they also acquired Emotient in 2016. This is how Emotient described their own technology back in 2011:

“Emotient’s proprietary algorithms make it possible to discern the most subtle expression or changes in expression and translate that into a defined emotional reaction. With a camera-enabled device or external webcam, our system can quickly process facial detection and automated expression analysis in real-time, or, for non-time-sensitive requirements, it can scan images and videos in batch mode to deliver in-depth analysis of single-subject and multiple-subject videos. FACET leverages machine learning in large datasets in order to achieve optimal speed and utility.”

Emotient’s algorithms are based on the work of Paul Ekman, Ph.D., a pioneer in the study of emotions and facial expressions and a professor emeritus of psychology in the Department of Psychiatry at the University of California Medical School. Paul Ekman has been active in this field for 32 years. I understand that people are mostly talking about the Face ID fail, as they need this to be reliable now that Apple has decided to remove Touch ID from their flagship product, but authentication is only part of the equation and it feels like not enough people care about the other implications. Here’s an interesting video of Paul Ekman talking about emotions and how we can control them.

Let’s zoom in on the last part (6:52):

“Pay close attention to the other person’s facial expressions, cause they’re the recipient of your emotions. You can’t see your own face, they see it, but you can see their face and you can tell how they’re reacting”.

“The changes within your body, changes in your musculature, in your respiration, in your sweating, in the temperature of the different parts of your body. They are different, we found, for different emotions”.

Now think about the other person being your phone, having full control over what you get to see and being able to measure all the variables associated with your emotions. Here’s an interesting video about micro expressions from the centre of body language: